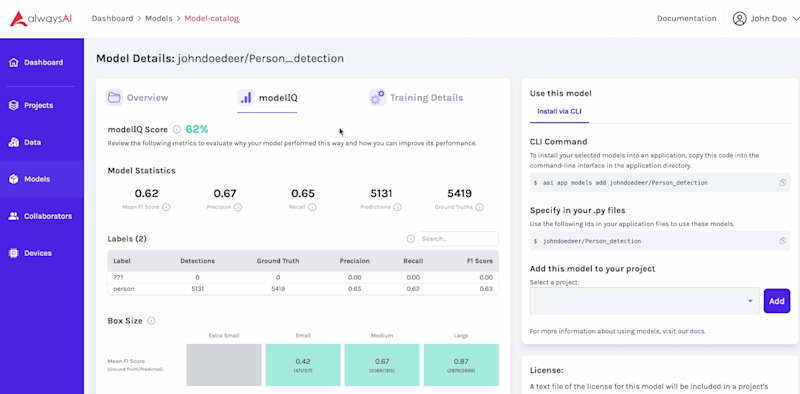

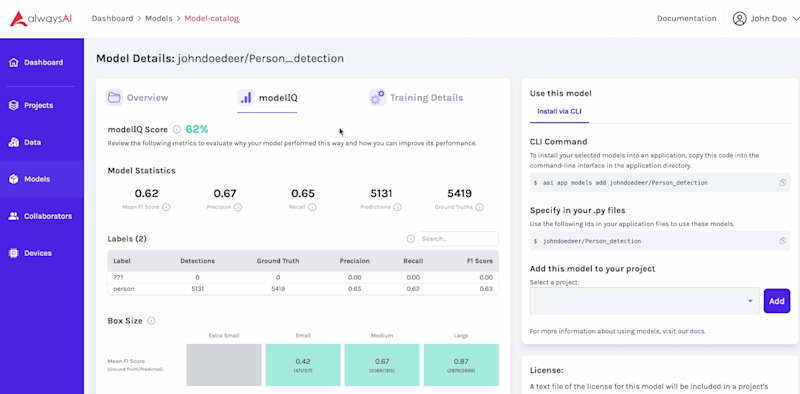

alwaysAI’s One-of-a-Kind modelIQ Tool Sets the Standards for AI Model Evaluation

by Kathleen Siddell
